5 Common Mistakes When Using DynamoDB Transactions

And how to avoid them

Welcome to the 37th edition of Excelling With DynamoDB.

In this week’s issue, I’m going to discuss 5 common mistakes developers make when working with transactions in DynamoDB, and how to avoid them.

DynamoDB transactions can be tricky.

When I first started working with them, I made many mistakes.

But now, after years of working with transactions, I’ve learned the best practices and what to avoid.

So I wanted to share some of the most common mistakes I’ve seen developers make with DynamoDB transactions.

High-level overview of a DynamoDB transaction

1. Over-using Transactions

This is by far the most common pitfall and the easiest to remedy.

It’s tempting to wrap everything in a transaction “just to be safe”.

However, this comes at a cost.

Each transactional read or write consumes twice the capacity of its non-transactional equivalent, so it costs more.

This is because, under the hood, DynamoDB has to perform two reads or writes for every item in the transaction: one for the prepare phase and another for the commit phase [1].

You should typically reserve transactions for use cases where 2 or more items must succeed or fail together.

When only a single item is involved, a condition expression on a regular write is often sufficient and cheaper.
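As a minimal sketch of that alternative, here is a "create only if absent" write done with a condition expression on a plain PutItem. The table layout, the `pk` attribute and the helper names are assumptions for illustration, and `client` is assumed to be a boto3 DynamoDB client.

```python
from typing import Any, Dict


def build_conditional_put(table_name: str, item: Dict[str, Any], key_attr: str) -> Dict[str, Any]:
    """Build PutItem arguments that only succeed if no item with this key exists."""
    return {
        "TableName": table_name,
        "Item": item,
        # The write is rejected if an item with the same key is already present.
        "ConditionExpression": "attribute_not_exists(#pk)",
        "ExpressionAttributeNames": {"#pk": key_attr},
    }


def create_user_once(client: Any, table_name: str, user_id: str, email: str) -> None:
    """Single-item 'create if absent' without a transaction (hypothetical schema)."""
    item = {"pk": {"S": f"USER#{user_id}"}, "email": {"S": email}}
    client.put_item(**build_conditional_put(table_name, item, "pk"))
```

Because only one item is touched, this gives the same "all or nothing" guarantee for that item while consuming standard write capacity.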

2. Not Planning for Transaction Limits

DynamoDB supports a maximum of 100 actions in a single transaction (the limit was 25 until AWS raised it in 2022).

Additionally, the total size of all items in the transaction cannot exceed 4 MB.

Planning your queries and writes around these limits is important; otherwise your transactions will fail.

If you need to write more than 100 items, consider breaking the operation down into smaller chunks of items. Keep in mind that each chunk is then its own transaction, so atomicity holds within a chunk but not across chunks.

You can also run multiple transactions in parallel, each with up to 100 items, to work around this limit.

However, it is important to remember that the same item must not be updated by more than one of these in-flight transactions; otherwise the conflicting transactions will fail.
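The chunking approach can be sketched roughly as follows. The helper names are hypothetical, `client` is assumed to be a boto3 DynamoDB client, and the per-transaction limit is passed in as a parameter so it can match the current DynamoDB quota.

```python
from typing import Any, Dict, Iterator, List


def chunk_actions(actions: List[Dict[str, Any]], max_actions: int) -> Iterator[List[Dict[str, Any]]]:
    """Yield consecutive slices of at most max_actions transaction actions."""
    if max_actions < 1:
        raise ValueError("max_actions must be at least 1")
    for start in range(0, len(actions), max_actions):
        yield actions[start:start + max_actions]


def write_in_chunks(client: Any, actions: List[Dict[str, Any]], max_actions: int) -> int:
    """Send one TransactWriteItems call per chunk and return the number of calls.

    Each chunk is atomic on its own; the run as a whole is NOT atomic.
    """
    sent = 0
    for chunk in chunk_actions(actions, max_actions):
        client.transact_write_items(TransactItems=chunk)
        sent += 1
    return sent
```

If a later chunk fails, earlier chunks have already been committed, so this only fits workloads that can tolerate or repair a partial write.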

3. Ignoring TransactionCanceledException

If you ignore the previous rule and update the same item in multiple ongoing transactions, the operation will fail with a “TransactionCanceledException”.

To prevent this error, avoid updating an item across multiple parallel transactions.

The other reasons a transaction can return a TransactionCanceledException are:

- condition check failures

- provisioned throughput exceeded

Always check the reasons for the cancellation in your error handling and implement retry logic based on the reason for the TransactionCanceledException.
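Here is one way that reason check might look. It works on the error response dictionary, which for a canceled transaction carries a `CancellationReasons` list with one entry per action. Which codes are treated as retryable is an assumption of this sketch, and the function names are hypothetical.

```python
from typing import Any, Dict, List

# Assumption: these codes describe transient conditions worth retrying.
RETRYABLE_CODES = {"TransactionConflict", "ProvisionedThroughputExceeded", "ThrottlingError"}


def cancellation_codes(error_response: Dict[str, Any]) -> List[str]:
    """Return one code per action; "None" means that action was not the cause."""
    reasons = error_response.get("CancellationReasons", [])
    return [reason.get("Code", "None") for reason in reasons]


def should_retry(error_response: Dict[str, Any]) -> bool:
    """Retry only when every real cancellation reason is transient."""
    codes = [code for code in cancellation_codes(error_response) if code != "None"]
    return bool(codes) and all(code in RETRYABLE_CODES for code in codes)
```

With boto3, the dictionary would typically come from the `response` attribute of the `ClientError` raised by `transact_write_items`. A failed condition check (`ConditionalCheckFailed`) is not retried here, since repeating the same request would usually fail the same way.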

4. Not Understanding Transaction Isolation

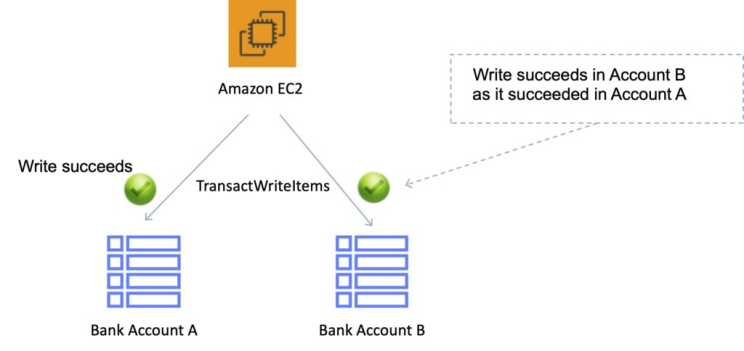

DynamoDB transactions provide serializable isolation, not repeatable-read isolation [1].

This means that while a transaction is running, other operations can still read the old state of the data.

This explains why some requests return old data while a transaction is in progress.

To remedy this, you can use transactional reads (TransactGetItems) instead of normal reads when you need a consistent view of several items.
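A transactional read might be sketched like this. The helper names are hypothetical, keys are assumed to be in DynamoDB's attribute-value format, and `client` is assumed to be a boto3 DynamoDB client.

```python
from typing import Any, Dict, List, Optional


def build_transact_get(table_name: str, keys: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Build the TransactItems list for a TransactGetItems call."""
    return [{"Get": {"TableName": table_name, "Key": key}} for key in keys]


def read_together(client: Any, table_name: str, keys: List[Dict[str, Any]]) -> List[Optional[Dict[str, Any]]]:
    """Read several items in one transactional read, in request order."""
    response = client.transact_get_items(TransactItems=build_transact_get(table_name, keys))
    # An entry without an "Item" is treated as "not found" in this sketch.
    return [(entry or {}).get("Item") for entry in response["Responses"]]
```

Like transactional writes, these reads consume double capacity, so they are best kept for cases where the items really must be seen together.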

5. Ingesting Data With Transactions

You should avoid using transactions for ingesting data in bulk.

For bulk writes, it’s better to use BatchWriteItem.

BatchWriteItem is optimized for throughput and much more resilient for bulk operations. A BatchWriteItem operation returns an UnprocessedItems key containing the items that were not written, allowing you to retry only those specific items.
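The retry loop around the unprocessed items could look something like the sketch below. The function name, attempt count and backoff values are assumptions, `client` is assumed to be a boto3 DynamoDB client, and batches are capped at 25 requests because that is the most a single BatchWriteItem call accepts.

```python
import time
from typing import Any, Dict, List

BATCH_LIMIT = 25  # maximum put/delete requests per BatchWriteItem call


def batch_put(client: Any, table_name: str, items: List[Dict[str, Any]],
              max_attempts: int = 5, base_delay: float = 0.05) -> List[Dict[str, Any]]:
    """Write items in batches, retrying only what DynamoDB left unprocessed.

    Returns the write requests that were still unprocessed after all attempts.
    """
    leftovers: List[Dict[str, Any]] = []
    for start in range(0, len(items), BATCH_LIMIT):
        batch = items[start:start + BATCH_LIMIT]
        pending = {table_name: [{"PutRequest": {"Item": item}} for item in batch]}
        for attempt in range(max_attempts):
            response = client.batch_write_item(RequestItems=pending)
            # UnprocessedItems has the same shape as RequestItems.
            pending = response.get("UnprocessedItems") or {}
            if not pending:
                break
            time.sleep(base_delay * (2 ** attempt))  # simple exponential backoff
        leftovers.extend(pending.get(table_name, []))
    return leftovers
```

An empty return value means everything was written. Anything left over can be logged or queued for a later pass instead of failing the whole ingest.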

AWS also lays out several best practices for transactions in the DynamoDB documentation.

Conclusion

Transactions in DynamoDB are powerful tools but require careful consideration.

The key is to understand your use case, find the right balance, and know their limitations and costs.

By understanding these common mistakes in transactions, you are already equipped with the knowledge to build better applications with DynamoDB.

👋 My name is Uriel Bitton and I hope you learned something in this edition of Excelling With DynamoDB.

📅 If you're looking for help with DynamoDB, let's have a quick chat.

🙌 I hope to see you in next week's edition!